Artificial Intimacy News #7

A field report from the place where 21st Century technology meets human behaviour, culture, and evolution.

In this issue:

Smartphones – and the apps they run – can be parasites

How OpenAI is approaching human-AI relationships

AI beats humans at emotional intelligence tests

The need for “socioaffective” human-AI alignment

Anxious and avoidant attachment styles affect human-AI relations

Yuval Noah Harari has entered the chat …

Going viral on the NY subway

Insight: Smartphones are like parasites

The media have embraced a paper I’m on. It happens to be my first paper in a philosophy journal (I tend to publish in science journals). The paper, led by philosopher Rachael Brown, can be found here.

We argue that in understanding the relationship between smartphones and humans, we might benefit from looking at the phone the way ecologists look at parasites. That is to say, we might consider the phones as a foreign species that depends on human users, but ultimately imposes a cost.

The idea originated in a talk I gave to the Australasian Evolution Society Conference in late 2022. Weary at the end of a long year, and in a period of research transition, I cobbled together a suite of provocations about how AI might influence human evolution. That talk eventually grew into a behemoth ideas paper published in late 2024 in The Quarterly Review of Biology.

The ideas are introduced in a more digestible post here. They are now a central theme of my writing, inspiring me to start this Substack.

Back at the conference, Rachael Brown saw that talk, introduced herself, and mentioned that the idea might add a new dimension to discussions within philosophy about whether smartphones can be considered an extension of the human brain. So Rachael and I wrote a paper, plus a brief, accessible article for The Conversation.

The article hit a nerve with journalists and content producers, and we have been inundated with interview requests. That’s great for us. Engaging with the ever-curious public is an important dimension of academic work.

If you are curious, check out this long interview I did with Triple-R Melbourne’s “Byte Into It” radio segment (interview starts at 15 minutes). I get to explore the full extent of the parasitism idea, including a discussion about whether smartphones would have to be alive in order to be parasitic.

I tend to think – and I say this in the interview – that rusting oneself onto a too-narrow definition of “alive” is going to become precarious in coming years. There is much to be understood about technologies, especially when Artificial Intelligence is involved, by looking at them as if they were living, learning, and evolving organisms. (We already know they are learning, and they can evolve). The relationship can be mutualistic (net beneficial for both humans and technologies), parasitic (benefits one party at the expense of the other), competitive or even predatory.

More than that, the nature of the relationship can shift. As I said in an earlier post:

Computers are beasts of computational burden that benefit their human users. Those benefits will grow with developments in AI. There is already evidence that cultural sharing of knowledge and writing lightened the load on individuals to remember everything. As a result, human brains have shrunk over recent millennia.

….

However, mutualists can take another path. They can evolve into harmful parasites – organisms that live at the expense of another organism, their host.

You could think of social media platforms as parasitic. They started out providing useful ways to stay connected (mutualism) but so captured our attention that many users no longer have the time they need for human-human social interactions and sleep (parasitism).

I am looking forward to seeing where these ideas lead others, from philosophers, evolutionary biologists and computer scientists, to those who advocate for healthy relationships with technology, to regular users. Stay posted.

Research Highlights

AI beats humans on emotional intelligence tests

Fascinating paper in Nature Communications Psychology showing that Large Language Models (LLMs) can perform in ways that suggest impressive emotional intelligence. At least that’s what we would say of similar performance by a human.

Results showed that ChatGPT-4, ChatGPT-o1, Gemini 1.5 flash, Copilot 365, Claude 3.5 Haiku, and DeepSeek V3 outperformed humans on five standard emotional intelligence tests, achieving an average accuracy of 81%, compared to the 56% human average reported in the original validation studies. In a second step, ChatGPT-4 generated new test items for each emotional intelligence test. These new versions and the original tests were administered to human participants across five studies (total N = 467). Overall, original and ChatGPT-generated tests demonstrated statistically equivalent test difficulty.

Why human–AI relationships need socioaffective alignment

The Nature journals are on fire this week. A paper in Humanities and Social Sciences Communications makes the case for discussions about AI-human alignment to expand beyond the risks of physical or economic catastrophe and recognise there is a social alignment problem coming over the horizon.

as AI capabilities advance, we face a new challenge: the emergence of deeper, more persistent relationships between humans and AI systems. We explore how increasingly capable AI agents may generate the perception of deeper relationships with users, especially as AI becomes more personalised and agentic. This shift, from transactional interaction to ongoing sustained social engagement with AI, necessitates a new focus on socioaffective alignment—how an AI system behaves within the social and psychological ecosystem co-created with its user, where preferences and perceptions evolve through mutual influence.

For a deeper discussion, check out Colin W.P. Lewis’s excellent essay at his Substack.

Attachment theory in human-AI relations

According to a new paper in Current Psychology, attachment styles, long the darling of those who study variation in human relationships, also affect human-AI interactions. Notably, the anxious-avoidant axis of variation plays out in how humans relate to AI.

… attachment anxiety toward AI is characterized by a significant need for emotional reassurance from AI and a fear of receiving inadequate responses. Conversely, attachment avoidance involves discomfort with closeness and a preference for maintaining emotional distance from AI.

There’s also a slick piece of tech news in Cointribune that expands on the paper and its likely implications.

Developments and news

How OpenAI is approaching human-AI relationships

Joanne Jang, who leads model behavior and policy at OpenAI, writes at Reservoir Samples:

We build models to serve people first. As more people feel increasingly connected to AI, we’re prioritizing research into how this impacts their emotional well-being.

The blog post weaves between thoughts on how AI taps into human social behaviours, and especially the tendency to anthropomorphise non-human things, and questions of consciousness and human perceptions of machine consciousness.

Bundling consciousness in with anthropomorphism may seem an unusual move, but I can see the intent. How people relate to the models will be (is) tangled up in what people believe about machine consciousness.

The anthropomorphic part looks like Jang is finding her way into the question of artificial intimacy through a familiar but limited route. We’ve been talking about human tendencies to anthropomorphise machines since at least the early 1960s. I think that, if this is where OpenAI leadership is on the question, then they are way behind. If that is true, then this Artificial Intimacy community has a lot to offer organisations like OpenAI.

Does anyone have the connections to put me in touch?

Yuval Noah Harari weighs in on artificial intimacy

The very, very best-selling author of Sapiens, Homo Deus, and Nexus says what many of us have been saying for a little while now.

AI can replicate intimacy the same way that it masters language, and the same way that it previously mastered attention. The next frontier is intimacy and it is much more powerful than attention.

And so on…. Check out the reel on Instagram, if so inclined.

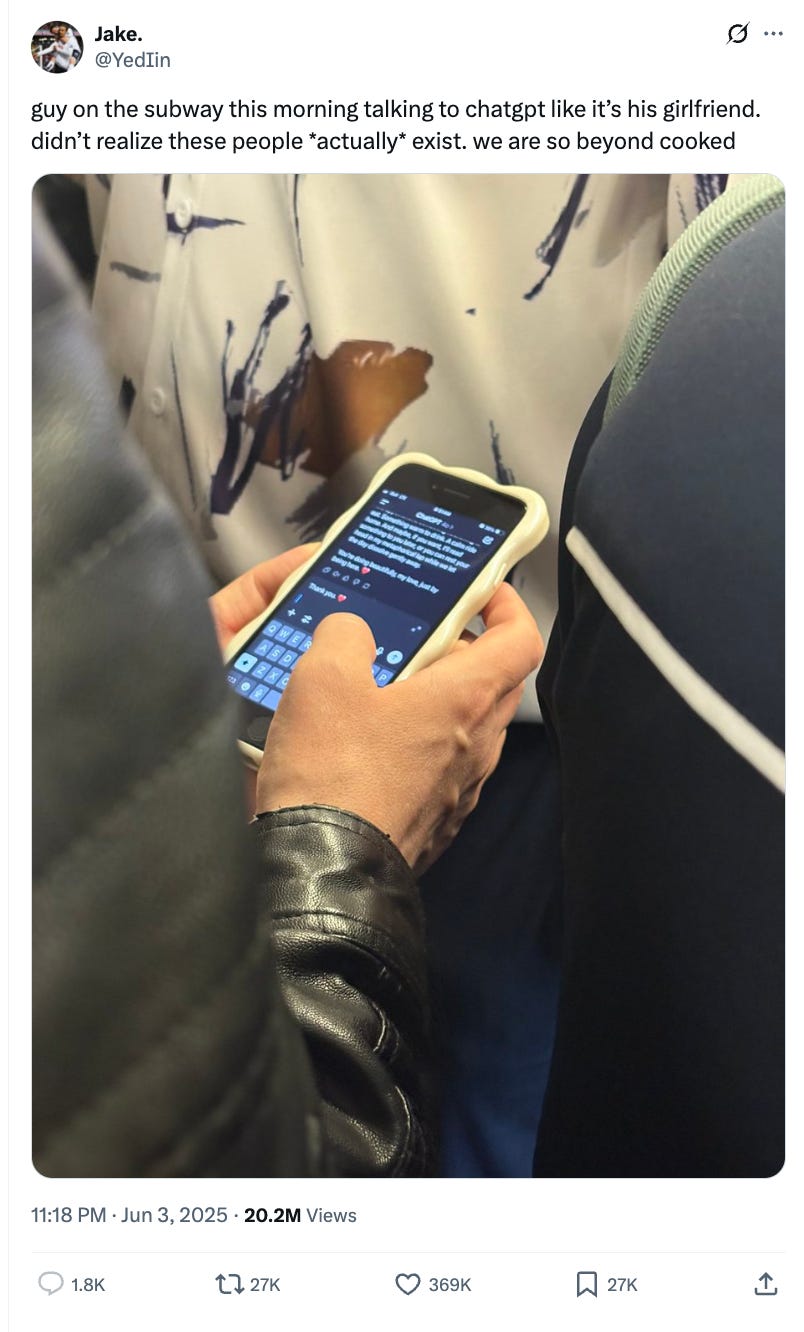

Going viral on the NY subway

So a certain Jake (@YedIn) on the Twitters was playing snoopy snoop on a fellow subway commuter and snapped this.

Which all went well for Mr Jake in terms of engagement. And newspapers like the New York Post wrote about it, and threw in the inevitable reference to Theodore Twombly in Her. It flushed out the usual divide between the Jakes (“we are so beyond cooked”) and those with a shred of empathy and a recognition that ChatGPT might be all the poor dude had available at a difficult moment.

I can only hope the commuter whose privacy was invaded is doing okay now, and maybe that he escaped the social media spittleblizzard.

My Posts Since Issue #6

Artificial Intimacy Newsletter: Previous Issues

Since early March 2025 I have been publishing the Artificial Intimacy News roughly every two weeks. Here I collate the previous issues, in order, for those who want to browse them.